About | Research Projects | Creative Practice | Teaching | Awards & Grants

During my professional and academic career, I have developed and led interdisciplinary research at the intersection of machine learning, human-centered design, and co-creative learning while critically examining their societal implications. In this research statement, I describe the trajectory of my work in three interrelated areas: (a) Multi-Modal, Cooperative and Human-Centered AI, (b) Inclusive, Trustworthy and Responsible AI in Society, and (c) Researching Co-Creative Learning and Expression. These overlapping themes continue to shape my vision for the intersectional, collaborative, and transdisciplinary approaches to societally engaged research that I hope to pursue in the future.

A. Multi-Modal, Cooperative and Human-Centered AI

During my master's and doctoral research in the Future of Computing Environments (FCE) group at the Graphics, Visualization and Usability (GVU) Lab at the Georgia Institute of Technology, and in the Speech Interfaces and Human Dynamics groups at the MIT Media Lab, I developed exploratory multi-modal interfaces using speech and audio processing, machine vision, environmental audio classification, gesture recognition, and wearable computing. These interfaces supported augmented teaching and learning, ubiquitous and contextual information awareness, and situational interaction in public spaces. During research internships at Fuji-Xerox Palo Alto Research Laboratories (FXPAL) and Mitsubishi Electric Research Laboratory (MERL) in Cambridge, I worked closely with research scientists on cross-modal audio and gesture-based mobile interaction tools for enhancing classroom learning, and on machine learning models for detecting dysarthric speech and for voice cloning to improve communication for people with speech impairments. These projects explored natural modalities of human-computer interaction, leveraging perceptual processing, contextual understanding, and continuous learning from real-world usage data to foster more socially engaging, inclusive, and human-centered technology design.

In my dissertation work at the MIT Media Lab, I developed ThinkCycle, an open-source and open-access engineering design platform, while examining the role of policies and practices that spur distributed, collaborative, and sustainable design innovation. After completing my doctoral degree, I co-founded Akaza Research in Cambridge, the first-ever open-source clinical informatics startup, funded in part by Small Business Innovation Research (SBIR) grants awarded by the National Institutes of Health (NIH). We worked with clinical researchers at Massachusetts General Hospital (MGH) and the Broad Institute of MIT and Harvard to develop open-source repositories and interoperable tools to support collaborative biomedical research. I subsequently directed new product development of statistical software tools and data visualization for simulating Phase I and Phase II clinical trials at the MIT startup Cytel. I also served as Principal Research Scientist in AI and Human-Computer Interaction at Dataminr in New York City, working on real-time, human-augmented and machine learning-based analysis of social media data. In all these contexts, while pushing the envelope of research and technology innovation was often paramount, I found myself critically engaging with the social, ethical, and societal implications of this work. This led me to undertake human-centric design and participatory and ethnographic research, and to explore the challenges, risks, and novel models for intellectual property, privacy, and cooperative interaction.

At Aalto University, as part of the consortium research project Designing Inclusive & Trustworthy Digital Public Services for Migrants in Finland (Trust-M), my interdisciplinary team of researchers in machine learning, HCI, and design prototyped and developed tools for AI-mediated collaboration between migrants and service advisors at the City of Espoo. Based on extensive design research with end-users, the team developed a prototype Human-AI collaborative system that mediates interactions between service advisors and migrants by listening in on one-on-one sessions and offering salient summaries and real-time guidance (on a shared screen), drawing on relevant knowledge from other experts and municipal information sources. The system combined real-time speech processing, summarization, collaborative visual narratives, and question-answer retrieval using open-source LLMs together with closed-domain Knowledge Graphs (KGs), drawing on information extracted from employment- and health-related websites and training documents. Our findings indicated the crucial role of leveraging peer-based knowledge between (and within) groups of service advisors, domain experts, and migrants, facilitated by AI-based tools. This exploratory research offered insights into human-centered AI integration in the migration process, highlighting both the opportunities and the challenges of AI-mediated communication and human-AI collaborative modes of interaction. The findings were published in a recent paper at CSCW 2024: “Enhancing Conversations for Migrant Counseling: Designing for Trustworthy AI-mediated Collaboration between Migrants and Service Advisors”. Future work includes facilitating conversational, speech-based AI interaction with distributed experts, i.e., with both human and AI-based agents in the loop.

At Aalto University, I helped establish the focus area on Human-Centered Interaction and Design (HCID) to foster collaborative research and teaching in this domain. I have also taught courses on Conversational AI and Voice Interaction with my colleague Prof. Tom Bäckström in the Department of Information and Communications Engineering (DICE), which led to our collaboration on the Trust-M consortium research project with the University of Helsinki, Tampere University, and the City of Espoo. In the future, I hope to continue expanding on this work with others to explore new possibilities for multi-modal human-AI collaboration and their implications for creating more ethical, inclusive, and trustworthy conversational AI interactions in real-world domains, as described below.

B. Inclusive, Trustworthy and Responsible AI in Society

How should we develop more inclusive, trustworthy, and responsible AI systems and services that are accountable to civil society? How do we design better value-aligned AI models using trustworthy data and incorporate them into innovative Public Sector AI services with multi-stakeholder participation, monitoring, and auditability, while ensuring they are aligned with regulatory provisions and societal needs? How do we devise novel systems that improve transparency, explainability, accountability, and good governance, and that offer both providers and users tools to better understand their implications, influence their adoption, and mitigate potential risks to society? How do we understand the societal implications of emerging AI systems and interrelated regulatory frameworks, including the EU AI Act and the EU Digital Services Act, for public and private sector stakeholders, end users, and citizens? How do we leverage machine learning methods and generative AI to identify complex forms of disinformation and hate speech in online discourse, while seeking to reduce polarization and to improve the democratic participation and inclusion of everyday citizens and vulnerable communities (including minorities and migrants), so that equitable and democratic societies can thrive?

These are some of the critical challenges we have been examining in our Critical AI and Crisis Interrogatives (CRAI-CIS) research group at Aalto University since 2020, with an interdisciplinary team of researchers from diverse backgrounds in computer science, design research, linguistics, media and communication studies, sociology, social psychology, law, and policy. Our work has been generously supported by research grants from the Kone Foundation, the Research Council of Finland, the Strategic Research Council (SRC), the Helsingin Sanomat Foundation, and the Ministry of Education and Culture’s (MEC) Global Program Pilots for India and the USA. Our interdisciplinary teams have worked with societal stakeholders in many exploratory pilots and consortium research projects with university and public sector partners and citizen groups. These include: 1) Reconstructing Crisis Narratives for Trustworthy Communication and Cooperative Agency, conducted jointly with the Finnish Institute for Health and Welfare (THL) to analyze crisis narratives in the media during the COVID-19 pandemic using qualitative research and social network analysis to improve public health outcomes; 2) Civic Agency in AI? Examining the AI Act and Democratizing Algorithmic Services in the Public Sector (CAAI), in partnership with the Kela Innovation Unit, Social Welfare Services; 3) Designing Inclusive & Trustworthy Digital Public Services for Migrants in Finland (Trust-M), in partnership with the University of Helsinki, Tampere University, and the City of Espoo; and 4) Liquid Forms of AI-infused Disinformation: Improving Information Resilience in the Finnish News Ecosystem, conducted in partnership with the University of Helsinki, YLE News Lab, and Faktabaari to develop computational tools and practices for detecting and countering AI-based disinformation.

Making Sense of Misinformation in Media Narratives during Crisis. The emergence of a crisis is often accompanied by unexpected events, uncertain signals, malicious misinformation, and conflicting reports that must be collectively interpreted and analyzed to understand the complex nature of the situation and its potential implications for society. In the Reconstructing Crisis Narratives project, supported by a 3-year award from the Research Council of Finland, I led a collaboration with THL to analyze crisis narratives during the COVID-19 pandemic using mixed methods, combining qualitative narrative inquiry with computational analysis of crisis discourses in news and social media among diverse publics. Since December 2020, a multidisciplinary team of researchers (media scholars, social scientists, linguists, data scientists, and public health experts) has collected data from Twitter/X, official press releases, and news sources while devising methods to analyze narratives of the COVID-19 pandemic in Finland in relation to global discourses. We created open datasets and designed a platform to represent and visualize complex crisis narratives, engaging decision-makers, journalists, and health communication experts in making sense of crises, public sentiments and anxieties, and societal perceptions of risk, responsibility, and trust. We have published several journal articles that model misinformation in COVID-19 narratives among communities on Twitter/X, examine the impact of malicious bots on public health communication, and provide a long-term assessment of the social amplification of risk from misinformation and of vaccine stance, helping public health agencies such as THL proactively shape public health policies and societal outcomes.

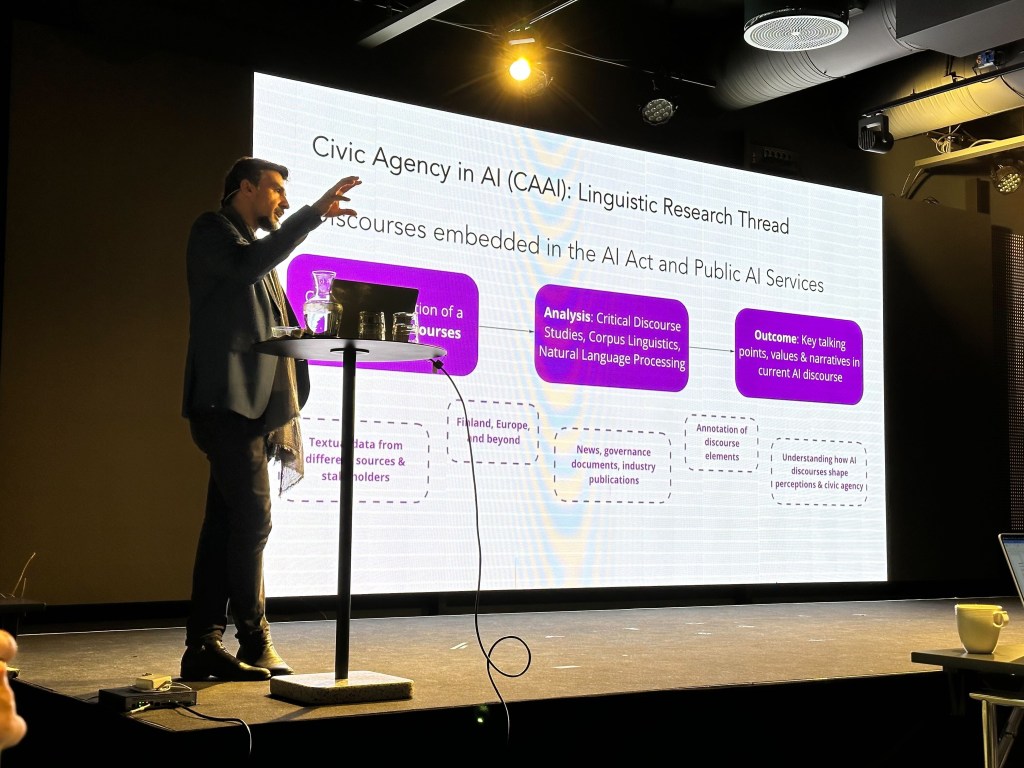

Democratizing Responsible Public AI Services in the EU. The public sector is increasingly embracing algorithmic decision-making and data-centric infrastructures to offer innovative digital services to citizens. As public AI services become more prevalent and affect citizens’ lived experiences, we must critically question their social, political, and ethical implications for the rights, risks, and responsibilities of providers and recipients, particularly the most vulnerable in society. To advance more democratic and citizen-centric digital infrastructures, I am leading the project Civic Agency in AI (CAAI), funded by 4-year awards from the Kone Foundation and the Research Council of Finland, which aims to foster citizens’ algorithmic literacy, agency, and participation in the design and development of AI services in the Finnish public sector. This research assesses the perspectives and practices of experts, providers, and citizens toward an inclusive ecosystem of future AI governance. The European Commission’s proposed AI Act has raised debates on the implications of this regulatory framework across the EU. We created an annotated corpus of texts on public AI services and used Critical Discourse Analysis (CDA) and natural language processing to examine the diverse values, narratives, and positions they express. We published this work, “Emerging AI Discourses and Policies in the EU: Implications for Evolving AI Governance”, in the CCIS Journal (2023). We are currently conducting case studies of emerging public AI services with Kela Social Services to highlight practices and challenges for the wider adoption of AI in the public sector. Through computational social science, we can critically examine changing public discourses on AI and the democratization of public AI services.

AI Regulatory Sandboxes are an emerging framework of technologies and practices, which we have been examining, for the responsible development and validation of AI systems in conjunction with developers, providers, and regulators across the AI lifecycle. AI Regulatory Sandboxes offer a model for assessing the legal compliance of AI-based systems through real-world experimentation, clarifying or minimizing legal uncertainty and allowing authorities to consider novel provisions to existing laws to support their adoption. Such sandboxes can foster experimentation, co-learning, and multi-stakeholder participation, particularly in high-risk domains. They require partnerships with public and private organizations in the relevant domains (Annex III of the AI Act); key stakeholders include market surveillance authorities, the EU AI Office, and users of AI-based services. Machine Learning Operations (MLOps) frameworks support continuous agile development and auditability across the AI lifecycle, as we emphasize in our journal article “Role of AI Regulatory Sandboxes and MLOps for Finnish Public Sector Services” (2023) in The Review of Socionetwork Strategies (RSS). I have been invited to present our findings at global sandbox forums with the new EU AI Office in Brussels. I have also been in dialogue with Sitra, the Ministry of Economic Affairs and Employment, and the Ministry of Transport and Communications (LVM) about piloting domain-specific AI Regulatory Sandboxes in Finland. Such pilots would also require working closely with partners such as CSC – IT Center for Science and the Testing and Experimentation Facilities (TEFs) to establish innovative technology infrastructures for AI sandboxes.

I have been an active member of the AI in Society research program at the Finnish Center for Artificial Intelligence (FCAI) and established the Digital Ethics, Society and Policy (Digital ESP) research area at Aalto University, which led to the creation of a new minor in Information Networks. I co-organized Contestations.AI: Transdisciplinary Symposium on AI, Human Rights and Warfare in Helsinki on October 23, 2024. I am currently serving on the steering committee of the Global AI Policy Research Network, established by the AI Policy Lab at Umeå University, Sweden, and the MILA-Quebec Artificial Intelligence Institute in Montreal, Canada; we recently published our report “Roadmap for AI Policy Research” (January 30, 2025) and plan to host an international summit at TU Delft in November 2025.

C. Researching Co-Creative Learning and Expression

How can new forms of pedagogy and participatory engagement with AI and data-centric concepts promote critical, playful, and inclusive algorithmic literacy and digital citizenship among young learners? In collaboration with my colleague Teemu Leinonen at Aalto University, I led a pilot project exploring these questions through a transnational collaboration with researchers in the Interact research group at the University of Oulu, the Indian Institute of Science (IISc), and ARTPARK in Bangalore, India. Through participatory workshops with young learners and educators in non-formal, after-school contexts, we explored pedagogical approaches to algorithmic literacy, robotics, and generative AI that nurture creative coding, curiosity, and critical thinking about the role of AI in society. Teemu and I also published a journal article on using augmented reality sandboxes to foster children’s play and collaborative storytelling. At Georgia Tech, I led the development of mobile interfaces for distributed digital learning in classroom settings, while at MIT I led a team that developed mobile interfaces for participatory media storytelling. At Aalto University, I collaborated with my colleague Koray Tahiroğlu in the Sound and Physical Interaction (SOPI) research group to examine co-creative practices with AI-based algorithms for experimental sound and music expression.

Empowering children and young learners with multimodal AI-based tools for co-creation can be highly engaging and playful, but such tools must be carefully devised with teachers and educators to ensure they are adopted in ethical and pedagogically sound ways. Hence, it is crucial not only to develop innovative tools but also to validate their pedagogical value empirically. I have been serving as an Associate Editor for the International Journal of Child-Computer Interaction (IJCCI) and have served on the steering committee of the International Conference on Interaction Design and Children (IDC) since 2013. Together with colleagues in the Interact research group at the University of Oulu, I helped establish a special interest group on Children and AI at FCAI to foster more research on this topic in Finland.

As part of our Trust-M research project with the City of Espoo, my team has been examining the needs of young migrants as they navigate cultural adaptation, through participatory design of LLM-based conversational agents (CAs), while observing the intersections and tensions between the needs of end-users, stakeholders, and government policies related to cultural adaptation. The research offers implications for implementing chatbots that support migrants’ cultural adaptation and for developing culturally sensitive LLMs in a co-creative manner. Our findings will be published in the forthcoming paper “Into the Unknown: Leveraging Conversational AI with Large Language Models in Supporting Young Migrants’ Journeys Towards Cultural Adaptation” and presented at the ACM CHI Conference in Yokohama, Japan.

As Large Language Models (LLMs) emerge as potential co-creative and co-learning conversational agents, they promise more fluid, adaptive educational interactions than traditional intelligent tutoring systems. However, the extent to which LLM behavior aligns with human tutoring patterns remains poorly understood. Recent thesis research in my group examined this tension between fluid interfaces and fixed behavioral patterns by comparing human teachers with AI-based tutors. Through a systematic analysis of the open CIMA language-learning dataset, the research compared the distributions of tutoring actions and the response patterns of human teachers and three state-of-the-art LLMs. The findings suggest that while LLMs enable fluid interaction, they rely on over-explanatory, fixed tutoring patterns that differ from the reflexive, turn-taking strategies of human teachers. In future work, we must design conversational AI systems not as replacements for human teachers, but to support engaging forms of co-learning, co-teaching, and evaluation as a co-creative process between humans and AI-based pedagogical agents.

My intellectual identity has been shaped by an unconventional trajectory spanning science-technology, media-arts and cultural studies, and international human rights advocacy. The crucial shifts and accomplishments along the way were only possible with the guidance and mentorship of many scholars, practitioners and collaborators (including my students). This digital portfolio provides a glimpse into the ongoing transdisciplinary approach and critical ethos emerging in my research, teaching and creative practice.


Copyright All Rights Reserved © 2025