Honored to be appointed as docent by the University of the Arts Helsinki, a recognition awarded for significant research or artistic merit in the Finnish and European university systems.

I’ve been fortunate to have had the opportunity to join the newly established Uniarts Research Institute as a visiting researcher over the past year, interacting with many engaging faculty colleagues and doctoral and postdoctoral researchers.

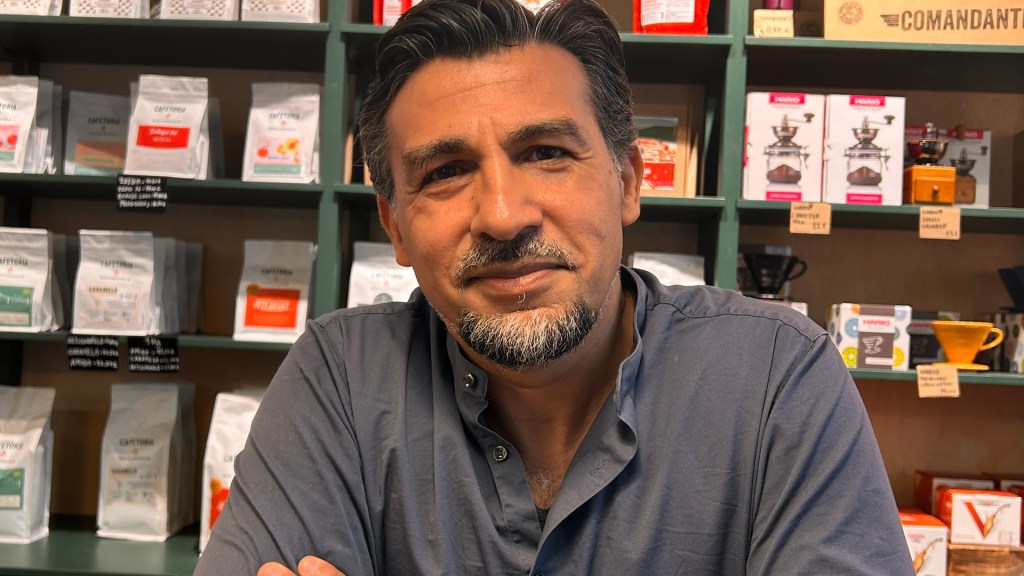

An extended interview published by Uniarts profiles my background “shaped by an unconventional trajectory from science and technology to media arts and cultural studies while engaging with conflict and social justice” and ongoing exploratory directions in critical AI, including a documentary film project I’m currently conceptualizing.

Read more here: https://www.uniarts.fi/en/articles/interviews/nitin-sawhney-ai-is-just-a-tool-whats-important-is-to-focus-on-what-we-actually-want-to-achieve/

It’s a surprisingly personal profile, so I’m sharing some relevant excerpts:

“I’m interested in how we’re able to address social injustice, ecological crises or conflicts. Our toolbox needs interdisciplinary research that combines social science, design, artistic practices, law and technological tools like AI. That’s the sort of culture I tried to foster in my research group at Aalto University.”

Sawhney believes it is more important to focus on how we understand and tackle complex societal conditions, rather than the tools or technologies we develop and deploy.

“I’m not so much interested in AI itself, but rather in nurturing new possibilities between humans and machines.”

He believes cooperative human-AI learning and expression offer more interesting possibilities for research, citing examples he has worked on such as use of AI as a co-creative partner in music and sound composition, or supporting human counselling services as a knowledgeable partner.

“When it comes to using and developing generative AI systems, we should be much more cognizant of their limitations and be able to better understand and influence their emerging outcomes. If AI reduces our agency or critical thinking, which is what a recent MIT study of ChatGPT use by young students found, then we are heading in the wrong direction.”

Sawhney believes that the uncritical use of large language models (LLMs) entails worrying features. He lists problems such as bias, disinformation, privacy and copyright infringement, as well as the ecological impact, but also how such AI data infrastructures controlled by a handful of big tech companies diminish the democratic agency and oversight of civil society.

“I notice that even my colleagues use them without questioning it. I don’t use commercial LLMs or chatbots in my research, teaching or personal work.”

Sawhney’s research team has examined the use of Knowledge Graphs (KGs) as an alternative way to complement LLMs to provide more trustworthy and explainable outcomes. Knowledge graphs organise data as a network-like structure, simultaneously emphasising meanings and relationships of things.

Sawhney has become increasingly concerned with the role of AI in technologised warfare and human rights violations. Over the past year he organised interdisciplinary symposia (Contestations.AI) and international workshops to draw critical perspectives from researchers, journalists, social scientists, filmmakers and artists.

While at Uniarts Helsinki, Sawhney has begun conceptualising a documentary film, titled “Where’s Daddy?”, that examines the role of artificial intelligence in conflicts and warfare, and how it dehumanises the lives of people affected by war.

Curious to hear your thoughts on all this, and feel free to reach out if you’d like to have a conversation sometime or engage in something collaborative.